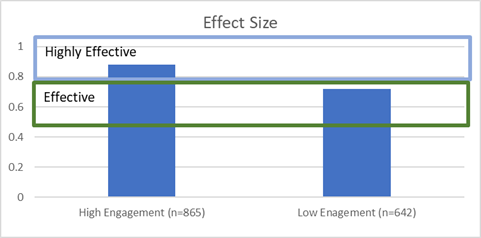

Using a General Linear Model that controlled for the number of assessments for each case and the therapist’s SAES in year one, we found a strong trend for the effect of the therapist’s level of engagement in year two (p < .10).
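The model described above can be written as a regression equation. This is a sketch under stated assumptions, not the exact specification used in the analysis. It assumes the outcome is the year-two SAES for case $i$ treated by therapist $j$, and the symbols ($\mathrm{Assessments}$, $\mathrm{Engagement}$, the $\beta$ coefficients) are introduced here for illustration only.

$$
\mathrm{SAES}^{(2)}_{ij} = \beta_0 + \beta_1\,\mathrm{Assessments}_{ij} + \beta_2\,\mathrm{SAES}^{(1)}_{j} + \beta_3\,\mathrm{Engagement}^{(2)}_{j} + \varepsilon_{ij}
$$

Under this reading, the reported trend corresponds to a test of $H_0\colon \beta_3 = 0$, that is, whether year-two engagement predicts outcomes once assessment count and year-one effectiveness are held constant.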

Taken together, these results provide additional evidence that clinicians who are actively engaged in receiving feedback are likely to deliver more effective services, regardless of the number of sessions. This difference is large and clinically meaningful. Consistent with findings from the entire ACORN sample of therapists, engagement in seeking feedback also appears to be associated with improvement over time.

Discussion and Implications for the Future

The number of clinicians actively using the ACORN Toolkit increased significantly during 2019 as part of a quality improvement initiative spearheaded by the MBHP and the local school district, with active support from Elizabeth Conners, PhD, of Yale University.

Unfortunately, funding was discontinued in 2020 when the MBHP lost the contract to manage this population in Maryland. Fortunately, five of the seven treatment organizations participating in the collaboration continued at their own expense, without support from the MBHP. The gains in effect size observed in 2019 were largely maintained during 2020; however, because of the impact of COVID-19, the samples of therapists and clients were smaller, and the 2020 data were not included in this report.

The ACORN collaboration continues to seek funding to support these agencies as students return to school and therapists can once again provide the full range of school-based services.

Summary

The data provide strong evidence of the effectiveness of school-based mental health services and support the hypothesis that measurement and feedback can increase the effectiveness of these services. Now, perhaps more than ever, there should be a focus on improving children’s mental health outcomes. We may never know the full extent of the pandemic’s impact on children’s mental health. However, by implementing feedback-informed treatment, we have the opportunity to track and, in turn, improve students’ mental health outcomes.