G. S. (Jeb) Brown, PhD, Center for Clinical Informatics

Ashley Simon, Center for Clinical Informatics

Christophe Cazauvieilh, PhD, University of Bordeaux, France

Published on the Society for the Advancement of Psychotherapy

Abstract

This paper presents analyses of outcome data for 615 clinicians treating 107,194 patients over a three-year period to determine whether clinicians’ mean effect sizes increased over time. A standardized measure of client global distress was used to measure patient improvement over the course of therapy. Improvement is reported as a statistic known as the severity adjusted effect size (SAES), which was calculated for each patient using intake scores and diagnostic group as predictors in a multivariate regression model. A mean SAES for each clinician was calculated for a two-year baseline period and for a 12-month follow-up period of using the outcome tools. A hierarchical linear model was used to control for sample size in each year, with a minimum sample of 30 cases in the baseline period and 15 cases in the follow-up period. Clinician engagement in receiving feedback was also measured by counting the number of times the clinician logged into the online platform to view their results during the follow-up period. Clinicians were grouped into high and low engagement based on a median split in the number of logins in the follow-up period. Clinicians who logged in to view their data at least 556 times during the follow-up year were placed in the high engagement group (n=308; 50%). This group averaged a mean SAES of .96, compared to .85 for clinicians who reviewed their results less frequently (n=307; 50%). The login count during the follow-up period correlated significantly with the increase in mean effect size between the baseline and follow-up periods (Pearson r=.15, p<.0005) and with the mean SAES during the follow-up period (Pearson r=.20, p<.0001). Login frequency during the two-year baseline period was not predictive of effect size during the second year (Pearson r=.05). This dataset provides evidence that clinician engagement in receiving feedback on questionnaire results is associated with significantly larger effect sizes as well as increases in effect size over time.
However, the mechanism for this effect is unclear. Clinician engagement was measured simply by counting a behavior, specifically how often the clinician logged into the website to view their data. We have reached out to ACORN participants to formulate a clinician-completed questionnaire, which will be used to explore the clinicians’ understanding of the value of the information and how it is used in their clinical work. Preliminary data is already being collected. This is a necessary next step in further understanding how best to assist clinicians in improving their personal effectiveness.

Purpose

The practice of routine measurement and feedback is often referred to as feedback informed care/treatment. The purpose of this study is to further explore the relationship between clinicians receiving ongoing feedback on progress and risk indicators for individual cases and improvement in their results over time. The dataset is the largest reported to date, both in the number of clinicians (n=615) and clients (n=107,194).

Goodyear et al. (2017) argued for a definition of clinician expertise that included both evidence of effective treatment and the ability to improve results over time. While there is ample evidence that routine measurement and feedback improves outcomes in general, primarily by reducing premature dropout of patients faring poorly in treatment, it is much less clear that individual clinicians improve their results over time. In fact, until quite recently the evidence suggested just the opposite: that clinicians do not become more effective over time. To quote from Wampold & Imel (2017):

“Clinicians do not get better with time or experience. That is, over the course of the professional careers, on average, it appears that clinicians do not improve, if by improvement we mean achieve better outcomes.”

Over the past decade the ACORN collaboration developed and validated a methodology for benchmarking behavioral health treatment outcomes as measured by pre-post change on a variety of client/patient-completed outcome questionnaires assessing symptoms of depression, anxiety, social conflict/isolation, functioning in daily tasks, and other symptoms treated through outpatient behavioral health services. The benchmarking methodology makes use of a standardized change score (Cohen’s d), commonly referred to as effect size. This permits combining results from multiple questionnaires, provided that the questionnaires share a common underlying construct as determined using factor analysis (Cohen, 1988; Minami et al., 2007; Minami et al., 2008a; Minami et al., 2008b; Minami et al., 2009; Minami et al., 2012).
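As a rough illustration of a standardized pre-post change score, the sketch below divides the mean improvement by the standard deviation of the intake scores. The choice of standardizer, the function name, and the example scores are assumptions made for illustration; the benchmarking papers cited above describe the actual procedure.

```python
from statistics import mean, stdev


def pre_post_effect_size(intake_scores, final_scores):
    """Mean pre-post improvement divided by the SD of intake scores.

    Assumes higher scores mean more distress, so a positive value
    indicates improvement. Using the intake SD as the standardizer is
    an assumption of this sketch.
    """
    if len(intake_scores) != len(final_scores):
        raise ValueError("each case needs both an intake and a final score")
    changes = [pre - post for pre, post in zip(intake_scores, final_scores)]
    return mean(changes) / stdev(intake_scores)


# Invented scores for three cases, each improving by one point.
example_d = pre_post_effect_size([2.0, 3.0, 4.0], [1.0, 2.0, 3.0])
```

Because the change is expressed in standard deviation units rather than raw points, results from questionnaires with different scales can be placed on a common metric.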

Clinician engagement was measured by the frequency with which clinicians logged into the ACORN Toolkit to view their data. Client data consists of clinical summaries of treatment generated by predictive algorithms as well as progress over treatment, as shown in client graphs.

Method

QUESTIONNAIRES

The ACORN collaboration participants used a variety of questionnaires, depending on the treatment population in question and the wishes of the various clinics. Prior analyses revealed that the various questionnaire items load on a common factor, often referred to as the global distress factor. This common factor permits the results of various questionnaires to be converted to a standardized measure of change referred to as effect size. The ACORN collaboration developed an advanced form of this statistic that also accounts for differences in questionnaires and case mix, the severity adjusted effect size (SAES). Use of this statistic is an important step when comparing results across different clinicians (Brown et al., 2015a; Brown et al., 2015b).

SAMPLE SELECTION CRITERIA

The sample of clinicians was identified based on two criteria. First, the clinician needed a sample of at least 30 clinical range cases that included multiple assessments and a final assessment between January 1, 2017 and December 31, 2018, the baseline period. Clinical range cases are defined as cases with intake scores above the 25th percentile for the reference population. This permits comparison of results to those from published clinical trials. Second, the clinician needed at least 15 similar cases in the following 12 months, January 1, 2019 to December 31, 2019, which is considered the follow-up period. Later dates were not used in this study because of the impact of COVID and the disruption of clinician services.

A total of 615 clinicians met these criteria.
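The two selection criteria can be sketched as a simple count per clinician. The input layout (one `(clinician_id, final_assessment_date)` pair per clinical range case with multiple assessments) and the function name are hypothetical; only the date windows and the minimums of 30 and 15 cases come from the text above.

```python
from collections import Counter
from datetime import date

BASELINE = (date(2017, 1, 1), date(2018, 12, 31))
FOLLOW_UP = (date(2019, 1, 1), date(2019, 12, 31))


def eligible_clinicians(cases, min_baseline=30, min_follow_up=15):
    """Return the set of clinician ids meeting both case-count criteria.

    `cases` is assumed to hold only clinical range cases that already
    have multiple assessments; that filtering is not shown here.
    """
    baseline_counts = Counter()
    follow_up_counts = Counter()
    for clinician_id, final_date in cases:
        if BASELINE[0] <= final_date <= BASELINE[1]:
            baseline_counts[clinician_id] += 1
        elif FOLLOW_UP[0] <= final_date <= FOLLOW_UP[1]:
            follow_up_counts[clinician_id] += 1
    return {
        clinician_id
        for clinician_id, n in baseline_counts.items()
        if n >= min_baseline and follow_up_counts[clinician_id] >= min_follow_up
    }
```

Cases whose final assessment falls outside both windows are ignored, which mirrors the exclusion of later dates described above.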

CALCULATION OF EFFECTIVENESS

The advanced ACORN methodology for benchmarking clinician results creates a statistic referred to as the SAES. Calculation of the SAES uses a general linear model regression to control for differences in severity of distress at intake, diagnostic group, and session number of the first assessment of the treatment episode (Brown et al., 2015a). When comparing results between clinicians or agencies, the use of Hierarchical Linear Modeling accounts for differences in sample size and permits an estimate of the true distribution of clinician effect sizes. For a more complete explanation of these methods, see Are You Any Good as a Clinician? (Brown, Simon & Minami, 2015b).
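The general idea of adjusting an effect size for intake severity can be illustrated with a residualized change score. The sketch below is deliberately simplified and should not be read as the ACORN formula: it regresses change on intake score only (diagnostic group and session number are omitted), re-centers the residuals on the sample's mean change, and divides by a reference standard deviation. The function name, the re-centering convention, and `reference_sd` are all assumptions.

```python
from statistics import mean


def severity_adjusted_effect_sizes(intake, final, reference_sd):
    """Per-case residualized change, re-centered and standardized.

    Simplified illustration: a one-predictor least-squares fit of
    change on intake score. Positive values indicate improvement.
    """
    change = [pre - post for pre, post in zip(intake, final)]
    mean_intake = mean(intake)
    mean_change = mean(change)
    sxx = sum((x - mean_intake) ** 2 for x in intake)
    if sxx == 0:
        raise ValueError("intake scores must vary to fit the regression")
    sxy = sum((x - mean_intake) * (y - mean_change) for x, y in zip(intake, change))
    slope = sxy / sxx
    intercept = mean_change - slope * mean_intake
    # Residual = observed change minus the change predicted from intake severity.
    return [
        (y - (intercept + slope * x) + mean_change) / reference_sd
        for x, y in zip(intake, change)
    ]
```

Under this convention a case that improves exactly as much as its intake severity predicts receives the sample's mean effect size, while cases that do better or worse than predicted fall above or below it.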

The mean SAES for this sample of clinicians was .86 (SD=.23) for the baseline period and .90 (SD=.25) for the follow-up period, a difference that is statistically significant (p<.001, one-tailed t-test). The total number of cases included in this sample was 107,194. By way of comparison, the mean SAES for all cases in the ACORN database during the full three-year period was .84 (n=141,386). This suggests that the subset of clinicians who met the selection criteria for this study tended to have better results in general than the broad population of clinicians using the platform.

As expected, clinicians’ estimated mean SAES during baseline was significantly correlated with their mean SAES during follow-up (Pearson r=.69, p<.0001).

The clinician sample was divided into three groups based on quartiles of measured effectiveness during the baseline period:

Low effectiveness (lower quartile; SAES < .71; n=158)

Average effectiveness (middle two quartiles; .71 ≤ SAES ≤ .98; n=308)

High effectiveness (results in upper quartile; SAES >.98; n=149)
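The grouping above amounts to a threshold lookup on each clinician's baseline mean SAES. A minimal sketch, using the cut points reported in the list (the function name and labels are illustrative):

```python
def effectiveness_group(baseline_saes, low_cut=0.71, high_cut=0.98):
    """Classify a clinician by baseline mean SAES using the reported cut points."""
    if baseline_saes < low_cut:
        return "low"
    if baseline_saes > high_cut:
        return "high"
    # Values equal to either cut point fall in the middle group.
    return "average"
```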

MEASURING ENGAGEMENT

Engagement in feedback informed care was estimated by how frequently clinicians logged into the platform during the follow-up period. The range of the number of logins in the follow-up period was quite large, from 0 to multiple thousands, with a mean of 1028 logins and a median of 556.

To simplify the presentation of results, a median split was used to divide clinicians into a low engagement group and a high engagement group, with high engagement defined as at least 556 logins in the follow-up period.
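A median split of this kind can be sketched as follows. The dictionary layout and function name are hypothetical; the rule that a count at or above the median counts as high engagement follows the "at least 556 logins" definition.

```python
from statistics import median


def engagement_groups(logins_by_clinician):
    """Label each clinician 'high' or 'low' by a median split on login count."""
    cut = median(logins_by_clinician.values())
    return {
        clinician_id: ("high" if logins >= cut else "low")
        for clinician_id, logins in logins_by_clinician.items()
    }


# Invented login counts for three clinicians.
example_groups = engagement_groups({"a": 0, "b": 556, "c": 3000})
```

A median split discards information about how far each clinician is from the cut point, which is why the correlations with the raw login count are also reported.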

Results

Table one displays the mean SAES and standard deviations during the follow-up period for each of the two engagement groups. The difference between the groups was statistically significant (p<.001, one-tailed t-test).

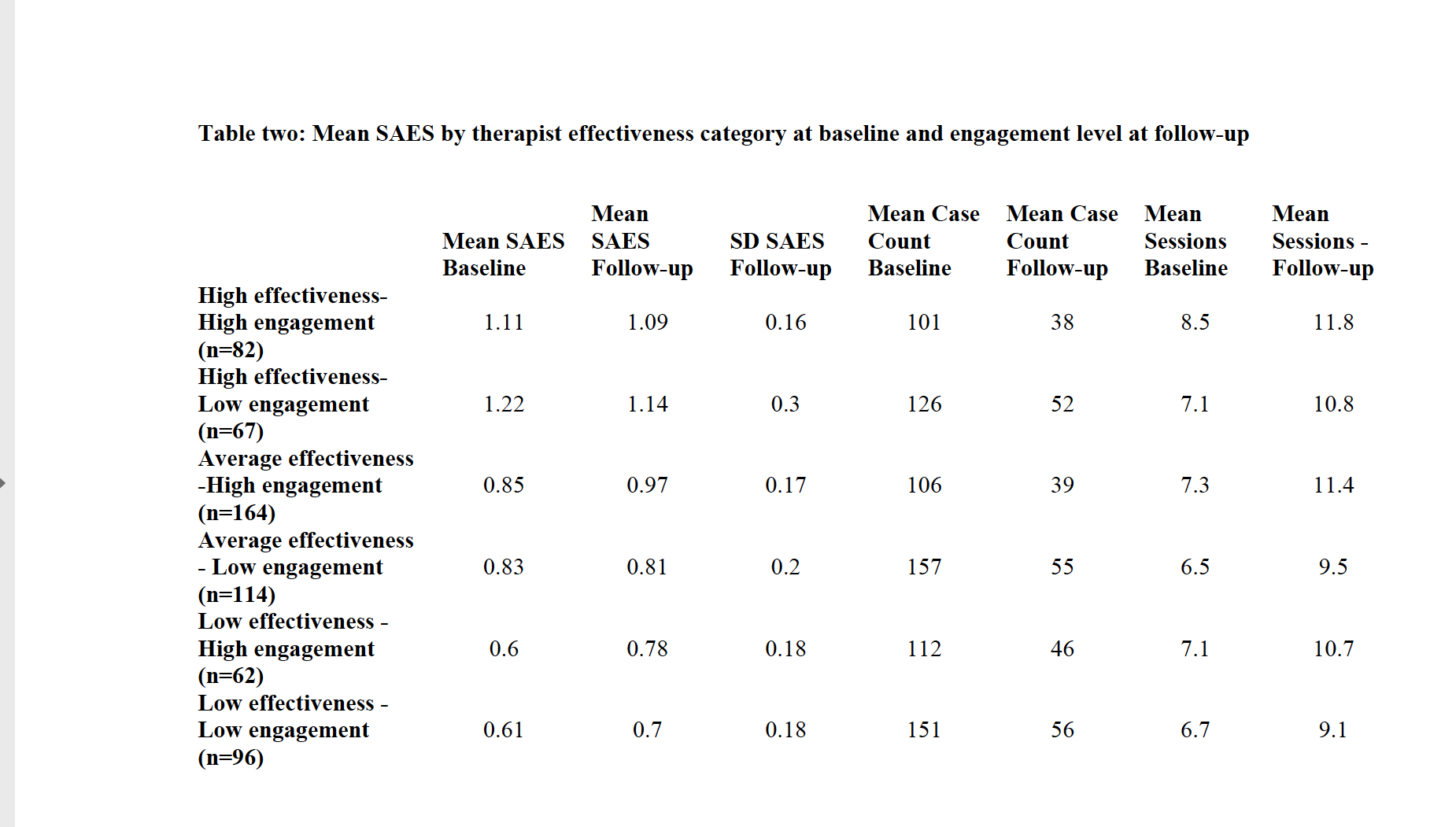

In addition to engagement level, three variables were hypothesized to possibly be associated with the increase in mean SAES: SAES at baseline, mean number of cases and mean number of sessions per case. Table two displays the mean SAES, case count, and mean number of assessments per case by effectiveness category at baseline and engagement level at follow-up.

The mean number of cases per clinician was not a significant predictor of increased mean SAES. However, as displayed in Table 3, the mean number of assessments per case increased between the baseline and follow-up period. The mean increases in assessments per case were correlated with increases in mean SAES at the clinician level (Pearson r=.20, p<.001). However, this increase occurred for both high and low engagement clinicians and did not explain the difference in outcomes between these two groups of clinicians. The correlation between the increase in session count and the login count was non-significant (Pearson r=.06).

Chart one presents the estimated distribution of SAES for clinicians grouped by effectiveness at baseline and engagement at follow-up.

Chart 1

The increased effect size as a function of engagement was not evident for the highly effective group. However, the differences between high and low engagement clinicians in the average and low effectiveness groups were significant (p<.01, one-tailed t-test).

Of course, those clinicians in the upper quartile during baseline have less room to improve than those in the lower quartiles. After adjusting for the clinicians’ mean SAES during the baseline period, the Pearson r correlation between the increase in SAES at follow-up and the number of logins in the follow-up period was .15 (p<.0005). The correlation between the login count and mean SAES during the follow-up period was .19 (p<.0001).
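For readers less familiar with the statistic, a Pearson correlation between two clinician-level variables, such as login count and change in mean SAES, can be computed as in the sketch below. It shows only the plain correlation and does not reproduce the adjustment for baseline SAES described above; the function name and example numbers are invented for illustration.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length with at least 2 values")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


# Invented data: login counts and change in mean SAES for five clinicians.
logins = [120, 400, 556, 900, 2500]
saes_change = [-0.02, 0.01, 0.03, 0.02, 0.08]
r = pearson_r(logins, saes_change)
```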

The reasons for the increase in assessments per case are also not clear. Ideally, each assessment represents a single session. However, inconsistency in data collection can result in the assessment count underrepresenting the count of sessions in the episode of care. During this study period, there was a trend for clinics to migrate from using paper forms that were faxed in for data entry to asking clients to complete questionnaires on browser-enabled portable devices. It is possible that this resulted in improved consistency of data collection and an increase in the average number of assessments per case.

Summary

This dataset provides evidence that clinician engagement in receiving feedback on questionnaire results is associated with significantly larger effect sizes as well as increases in effect size over time. However, the mechanism for this effect is unclear. Clinician engagement was measured simply by counting a behavior (i.e., how often the clinician logged into the website to view their data). We have reached out to ACORN participants to formulate a clinician-completed questionnaire, which will be used to explore the clinicians’ understanding of the value of the information and how it is used in their clinical work. Preliminary data is already being collected. This is a necessary next step in further understanding how best to assist clinicians in improving their personal effectiveness.